Agent Chat Protocol

Overview

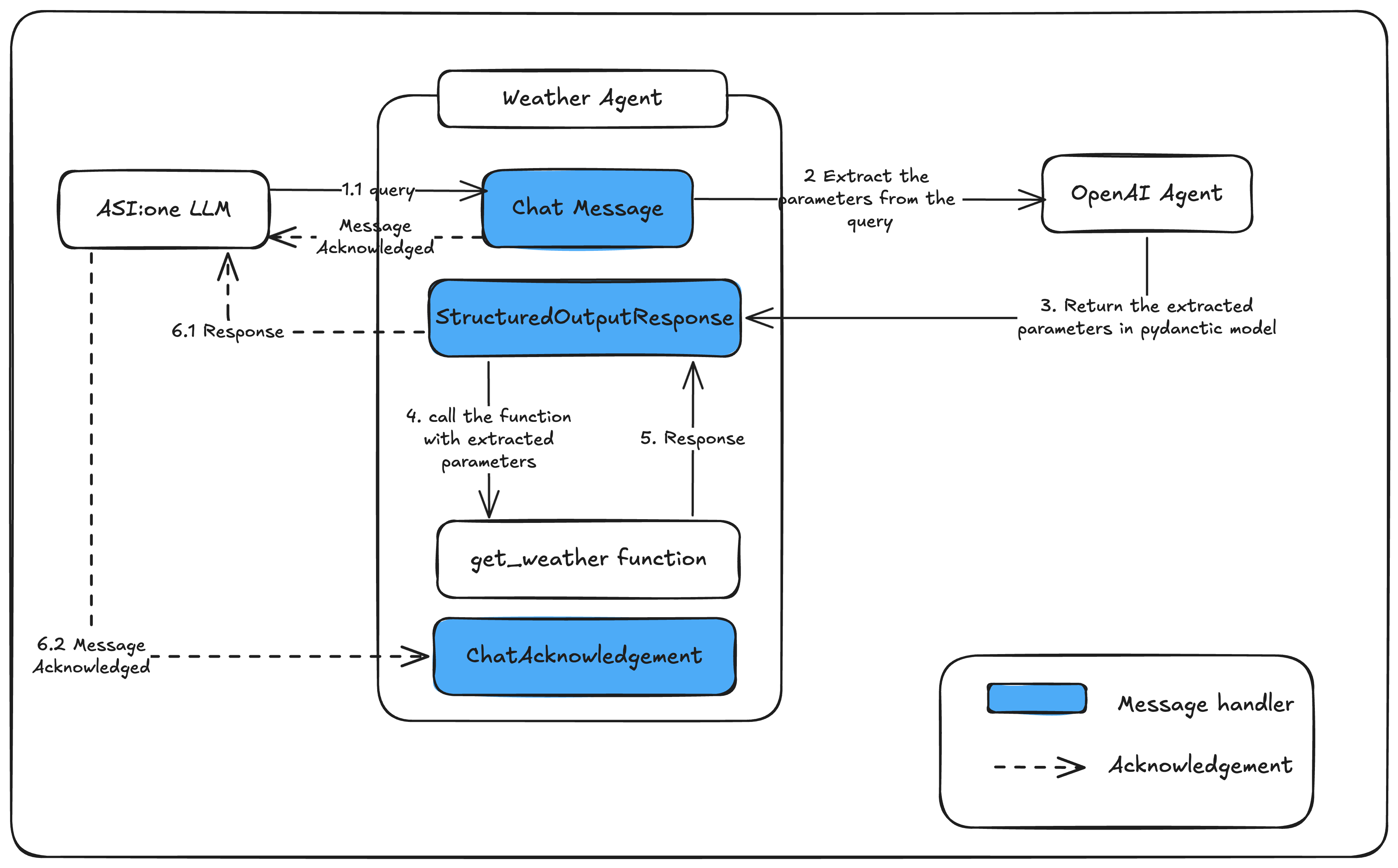

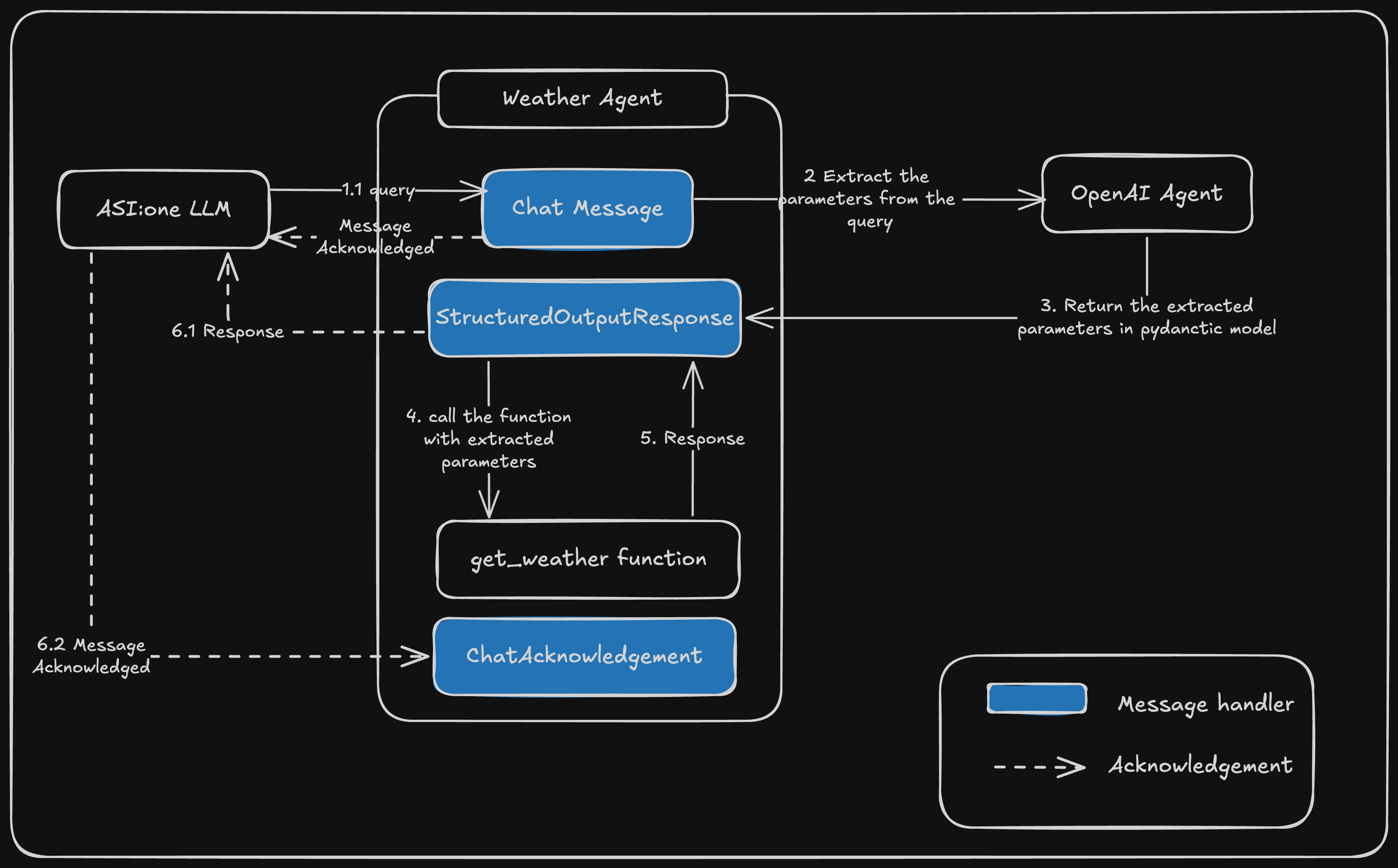

The Agent Chat Protocol enables AI agents to communicate in natural language and interoperate across ecosystems. With this protocol, an agent can receive user messages, acknowledge them, optionally request structured outputs from other agents, and reply in a consistent format.

- Learn more about the protocol spec: Agent Chat Protocol (manifest)

- Try chatting with a live agent on Agentverse: Example Weather Agent

What you’ll build

We’ll build a simple agent that:

- Receives chat messages in natural language

- Acknowledges the message using the protocol

- Requests a structured output (JSON) from another agent (an OpenAI-backed agent)

- Uses the structured output to fetch current weather

- Sends a natural language reply back to the user

Prerequisites

- Python 3.10+

- Install dependencies:

1) Create an agent

We start by defining a minimal agent.

- The Agent is the main runtime that sends and receives protocol-compliant messages.
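To illustrate the runtime's role without depending on the agent framework, here is a toy stand-in. MiniAgent, Ping and handle_ping are hypothetical names, not the framework's API: the sketch only shows the core idea of routing each incoming message to the handler registered for its type.

```python
from dataclasses import dataclass
from typing import Any, Callable


class MiniAgent:
    """Hypothetical stand-in for the Agent runtime (not the real class)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._handlers: dict[type, Callable[[str, Any], Any]] = {}

    def on_message(self, model: type) -> Callable:
        """Decorator that registers a handler for one message type."""
        def register(func: Callable[[str, Any], Any]) -> Callable[[str, Any], Any]:
            self._handlers[model] = func
            return func
        return register

    def deliver(self, sender: str, msg: Any) -> Any:
        """Dispatch an incoming message to the handler for its type."""
        handler = self._handlers.get(type(msg))
        if handler is None:
            raise ValueError(f"{self.name}: no handler for {type(msg).__name__}")
        return handler(sender, msg)


@dataclass
class Ping:
    text: str


agent = MiniAgent(name="weather_agent")


@agent.on_message(Ping)
def handle_ping(sender: str, msg: Ping) -> str:
    return f"{sender} said: {msg.text}"


print(agent.deliver("user-1", Ping(text="hello")))  # user-1 said: hello
```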

2) Include the Chat Protocol

Attach the Agent Chat Protocol so the agent can send and receive ChatMessage and ChatAcknowledgement messages.

Protocol(spec=chat_protocol_spec) creates a protocol for the standard chat schema (text content, session start/end, acknowledgements); you then register your message handlers on it.
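The chat schema can be pictured as a handful of small message models. The sketch below approximates them with standard-library dataclasses. Field names such as msg_id, content and acknowledged_msg_id are assumed to mirror the spec; in a real agent you would import the official models together with chat_protocol_spec rather than redefine them.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Literal, Union
from uuid import UUID, uuid4

# Approximation only: field names are assumed to mirror the chat spec.


def _now() -> datetime:
    return datetime.now(timezone.utc)


@dataclass
class TextContent:
    text: str
    type: Literal["text"] = "text"


@dataclass
class StartSessionContent:
    type: Literal["start-session"] = "start-session"


@dataclass
class EndSessionContent:
    type: Literal["end-session"] = "end-session"


AgentContent = Union[TextContent, StartSessionContent, EndSessionContent]


@dataclass
class ChatMessage:
    content: list[AgentContent]              # ordered content items
    timestamp: datetime = field(default_factory=_now)
    msg_id: UUID = field(default_factory=uuid4)


@dataclass
class ChatAcknowledgement:
    acknowledged_msg_id: UUID                # msg_id of the message being acked
    timestamp: datetime = field(default_factory=_now)


msg = ChatMessage(content=[StartSessionContent(), TextContent(text="Weather in Paris?")])
ack = ChatAcknowledgement(acknowledged_msg_id=msg.msg_id)
print([c.type for c in msg.content])  # ['start-session', 'text']
print(ack.acknowledged_msg_id == msg.msg_id)  # True
```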

3) Include a Structured Output Client Protocol

To request structured data (JSON) from another agent, we define a small client protocol and two models: one for the prompt + schema, and one for the response.

- We’ll send StructuredOutputPrompt to a remote AI agent. It will return a StructuredOutputResponse with a JSON object matching our schema.
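A minimal sketch of the two client-protocol models, assuming the field names prompt, output_schema and output (hypothetical here), plus a shallow helper, missing_required (also hypothetical), that checks a reply against the schema's required keys. A real agent would declare these as framework message models and could use a full JSON Schema validator instead.

```python
import json
from dataclasses import dataclass
from typing import Any


@dataclass
class StructuredOutputPrompt:
    prompt: str                    # the user's natural-language text
    output_schema: dict[str, Any]  # JSON Schema the reply must satisfy


@dataclass
class StructuredOutputResponse:
    output: dict[str, Any]         # JSON object produced by the remote agent


def missing_required(resp: StructuredOutputResponse, schema: dict[str, Any]) -> list[str]:
    """Return required schema keys absent from the response (shallow check only)."""
    return [key for key in schema.get("required", []) if key not in resp.output]


schema = {
    "type": "object",
    "properties": {"location": {"type": "string"}},
    "required": ["location"],
}
prompt = StructuredOutputPrompt(prompt="What's the weather in Berlin?", output_schema=schema)
resp = StructuredOutputResponse(output=json.loads('{"location": "Berlin"}'))
print(missing_required(resp, prompt.output_schema))  # []
```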

4) Helper: create a text ChatMessage

A small helper to create consistent ChatMessage replies. Note the EndSessionContent uses type="end-session".
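The helper's behaviour can be sketched without the framework by building the message as a plain dict. The name create_text_chat and the dict keys are assumptions meant to mirror the chat schema; the real helper would return the framework's ChatMessage model instead.

```python
from datetime import datetime, timezone
from typing import Any
from uuid import uuid4


def create_text_chat(text: str, end_session: bool = False) -> dict[str, Any]:
    """Build a ChatMessage-shaped dict (hypothetical helper).

    When end_session is True, an end-session item is appended so the
    receiving client knows the conversation is over.
    """
    content: list[dict[str, str]] = [{"type": "text", "text": text}]
    if end_session:
        content.append({"type": "end-session"})
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "msg_id": str(uuid4()),
        "content": content,
    }


reply = create_text_chat("It is 18°C in Paris.", end_session=True)
print([item["type"] for item in reply["content"]])  # ['text', 'end-session']
```

Keeping one helper for all replies means every outgoing message gets a fresh msg_id and timestamp, and the end-session marker is added in exactly one place.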

5) Handle incoming chat and forward to the AI agent

- On each inbound ChatMessage, we acknowledge it.

- We forward the user’s text to an AI agent capable of returning structured output that matches our weather request schema.
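The control flow of this handler can be sketched synchronously and without the framework. InboundChat, handle_chat, AI_AGENT and the sessions dict are hypothetical: the dict stands in for the agent's storage (keying it by a session id is an assumption), and the returned (recipient, payload) list stands in for the sends a real async handler would perform.

```python
from dataclasses import dataclass, field
from typing import Any
from uuid import UUID, uuid4

AI_AGENT = "agent1-ai-placeholder"  # hypothetical address of the AI agent

WEATHER_SCHEMA = {
    "type": "object",
    "properties": {"location": {"type": "string"}},
    "required": ["location"],
}


@dataclass
class InboundChat:
    sender: str
    text: str
    msg_id: UUID = field(default_factory=uuid4)


def handle_chat(
    msg: InboundChat, session_id: str, sessions: dict[str, str]
) -> list[tuple[str, dict[str, Any]]]:
    """Return the (recipient, payload) pairs to send for one inbound chat."""
    outbox: list[tuple[str, dict[str, Any]]] = []
    # 1. Acknowledge the message back to the user.
    outbox.append((msg.sender, {"acknowledged_msg_id": str(msg.msg_id)}))
    # 2. Remember who asked, so the structured reply can be routed back later.
    sessions[session_id] = msg.sender
    # 3. Forward the user's text to the AI agent with the expected schema.
    outbox.append((AI_AGENT, {"prompt": msg.text, "output_schema": WEATHER_SCHEMA}))
    return outbox


sessions: dict[str, str] = {}
outbox = handle_chat(InboundChat(sender="user-1", text="Weather in Oslo?"), "s1", sessions)
print([to for to, _ in outbox])  # ['user-1', 'agent1-ai-placeholder']
```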

6) Handle structured output response and reply

- When the remote AI agent replies with a JSON object, we parse it, fetch the weather, and send a natural-language message back to the original user. The final formatting is done by our agent (through the weather helper), not by the remote AI agent.
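A framework-free sketch of this step, assuming the structured output carries a location field and that the original sender was stored per session when the chat arrived. handle_structured_output and the stub weather function are hypothetical; the stub replaces the real network call so the example runs offline.

```python
from typing import Any, Callable


def handle_structured_output(
    session_id: str,
    output: dict[str, Any],
    sessions: dict[str, str],
    fetch_weather: Callable[[str], str],
) -> tuple[str, str]:
    """Turn the AI agent's JSON into a (recipient, reply_text) pair."""
    sender = sessions.get(session_id)
    if sender is None:
        raise KeyError(f"no pending chat for session {session_id!r}")
    location = output.get("location")
    if not isinstance(location, str) or not location.strip():
        # The AI agent gave no usable location; ask the user to rephrase.
        return sender, "Sorry, I couldn't tell which location you meant."
    return sender, fetch_weather(location.strip())


def fake_weather(location: str) -> str:
    # Stub standing in for the real weather lookup (no network access).
    return f"Weather in {location}: 12°C, light wind"


sessions = {"s1": "user-1"}
print(handle_structured_output("s1", {"location": "Oslo"}, sessions, fake_weather))
# ('user-1', 'Weather in Oslo: 12°C, light wind')
```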

7) Wire up protocols and run
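In the framework this step amounts to including each protocol in the agent and then starting it (in uagents, typically agent.include(...) followed by agent.run()). The toy model below, with hypothetical ToyProtocol and ToyAgent classes, shows the idea behind including protocols: each protocol contributes its handlers, and the agent merges them while refusing duplicates.

```python
from typing import Any, Callable

Handler = Callable[[Any], Any]


class ToyProtocol:
    """Hypothetical protocol: a named group of handlers keyed by message type."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.handlers: dict[str, Handler] = {}

    def on(self, msg_type: str) -> Callable[[Handler], Handler]:
        def register(func: Handler) -> Handler:
            self.handlers[msg_type] = func
            return func
        return register


class ToyAgent:
    """Hypothetical agent that merges the handlers of included protocols."""

    def __init__(self) -> None:
        self.handlers: dict[str, Handler] = {}

    def include(self, proto: ToyProtocol) -> None:
        # Refuse to let two protocols claim the same message type.
        clash = self.handlers.keys() & proto.handlers.keys()
        if clash:
            raise ValueError(f"{proto.name} redefines {sorted(clash)}")
        self.handlers.update(proto.handlers)


chat = ToyProtocol("chat")
structured = ToyProtocol("structured-output-client")


@chat.on("ChatMessage")
def on_chat(msg: Any) -> str:
    return "ack"


@chat.on("ChatAcknowledgement")
def on_ack(msg: Any) -> None:
    return None


@structured.on("StructuredOutputResponse")
def on_output(msg: Any) -> str:
    return "reply"


agent = ToyAgent()
agent.include(chat)
agent.include(structured)
print(sorted(agent.handlers))
# ['ChatAcknowledgement', 'ChatMessage', 'StructuredOutputResponse']
```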

Weather utility module (functions.py)

This helper module defines the expected schema for the structured output and a function that fetches weather from Open-Meteo. The function returns a single pre-formatted string under the weather key.
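A sketch of what such a module could contain, assuming the structured output carries a single location field. The Open-Meteo geocoding and forecast endpoints and the current_weather=true parameter are part of Open-Meteo's public API; the schema, the function names and the exact string format are assumptions. format_weather is kept separate from the network call so it can be tried offline.

```python
import json
from typing import Any
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed schema for the structured output (hypothetical).
WEATHER_REQUEST_SCHEMA: dict[str, Any] = {
    "title": "WeatherRequest",
    "type": "object",
    "properties": {"location": {"type": "string"}},
    "required": ["location"],
}

GEOCODE_URL = "https://geocoding-api.open-meteo.com/v1/search"
FORECAST_URL = "https://api.open-meteo.com/v1/forecast"


def geocode_url(location: str) -> str:
    return f"{GEOCODE_URL}?{urlencode({'name': location, 'count': 1})}"


def forecast_url(lat: float, lon: float) -> str:
    params = {"latitude": lat, "longitude": lon, "current_weather": "true"}
    return f"{FORECAST_URL}?{urlencode(params)}"


def format_weather(location: str, forecast: dict[str, Any]) -> dict[str, str]:
    """Collapse a forecast payload into one string under the 'weather' key."""
    cw = forecast["current_weather"]  # Open-Meteo defaults: °C and km/h
    return {"weather": f"{location}: {cw['temperature']}°C, wind {cw['windspeed']} km/h"}


def get_weather(location: str) -> dict[str, str]:
    """Geocode the location, then fetch current weather (needs network access)."""
    with urlopen(geocode_url(location), timeout=10) as resp:
        places = json.load(resp).get("results") or []
    if not places:
        return {"weather": f"Could not find a location named {location!r}."}
    place = places[0]
    with urlopen(forecast_url(place["latitude"], place["longitude"]), timeout=10) as resp:
        forecast = json.load(resp)
    return format_weather(place["name"], forecast)


# Offline demo with a hand-written payload (not real data).
print(format_weather("Berlin", {"current_weather": {"temperature": 21.3, "windspeed": 9.4}}))
# {'weather': 'Berlin: 21.3°C, wind 9.4 km/h'}
```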

Complete example (copy-paste)

Combine everything into two files that you can run as a hosted agent or locally.

This Weather Agent is an Agentverse hosted agent. You can create your own hosted agent by following the Hosted Agents guide.

Why the Agent Chat Protocol is useful

- Natural language first: users can speak or type naturally; your agent wraps messages in a standard structure

- Interoperability: any agent implementing the protocol can communicate, regardless of internal implementation

- Extensible: add client protocols (like structured output) to connect to specialized agents

- Reliability: acknowledgements and session controls help build robust, user-friendly experiences

Tip: You can also use this agent through ASI:One by mentioning it directly in your prompt, for example:

To try a live conversation experience, visit the Example Weather Agent on Agentverse.